Policies in the cloud define how resources are managed, what they can do, who has access to them, and the conditions under which they operate. Typically, these rules are applied using tools like the AWS Management Console, GCP IAM, Azure RBAC, or custom scripts written for the specific needs of the organization. While these methods work, they can become inconsistent as your environments scale, especially when different teams manage resources in their own ways.

Policy as Code addresses this by defining policies as structured files, often in formats like JSON or YAML, or in a policy language such as Rego. This approach is especially useful in multi-cloud setups spanning AWS, GCP, and Azure, where each provider enforces policies in its own way, which can lead to gaps or conflicting standards. Policy as Code gives you a single, consistent way to express rules across all of these platforms. It also integrates well into CI/CD pipelines, where it can stop non-compliant resources before they are deployed, saving the time and effort of fixing issues later.

Several tools make Policy as Code possible. Open Policy Agent (OPA) is a general-purpose policy engine. It is commonly used with Kubernetes (for example, through Gatekeeper) to control workloads and access, and it can also validate infrastructure definitions such as Terraform plans. AWS Config evaluates AWS resources against rules, such as requiring encryption or restricting instance types. Azure Policy applies similar checks within Azure, and GCP's Organization Policy Service enforces constraints on resource configurations across an organization.

By using Policy as Code, teams can simplify how they enforce rules, maintain consistency across environments, and improve security and compliance.

Core Elements of Policy as Code Implementation

Implementing Policy as Code involves more than just writing policies. It requires a framework that ensures policies are applied consistently, tested thoroughly, and monitored for compliance. This framework can be broken down into four main components:

Policy Definition

This is where policies are written in a structured format, typically JSON, YAML, or a domain-specific language like Rego for tools such as Open Policy Agent. The goal is to define clearly the rules you want to enforce. For example, you might write a policy to enforce resource tagging, restrict instance sizes, or require encryption for storage. These definitions should be stored in a version control system like Git to ensure traceability and collaboration across teams.
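To make the idea of a structured policy definition concrete, here is a minimal Python sketch that stores a tagging rule as JSON and checks a single resource against it. The JSON schema (id, resource_type, required_tags) and the evaluate helper are hypothetical; real tools such as OPA, Azure Policy, or AWS Config each define their own formats.

```python
import json

# Hypothetical policy document. The schema (id, resource_type, required_tags)
# is an illustration only, not a format defined by OPA, AWS, GCP, or Azure.
POLICY_JSON = """
{
  "id": "require-department-tag",
  "resource_type": "aws_instance",
  "required_tags": ["department"]
}
"""


def evaluate(policy: dict, resource: dict) -> list:
    """Return violation messages for a single resource under one policy."""
    if resource.get("type") != policy["resource_type"]:
        return []  # the policy does not apply to this resource type
    tags = resource.get("tags") or {}  # treat missing or null tags as empty
    return [
        f"{resource['name']}: missing required tag '{tag}' ({policy['id']})"
        for tag in policy["required_tags"]
        if tag not in tags
    ]


policy = json.loads(POLICY_JSON)
resource = {"type": "aws_instance", "name": "web", "tags": {"Name": "web"}}
print(evaluate(policy, resource))
```

Keeping the rule in data rather than in code is what lets it live in Git next to the infrastructure it governs and be reviewed like any other change.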

Enforcement

Policies are only effective when they are applied to resources. Enforcement mechanisms make sure that the rules defined in your policies are followed. Tools like AWS Config, Azure Policy, and GCP Organization Policies automatically evaluate resources and take action when a rule is violated. Depending on the tool, that action might be blocking the creation of a non-compliant resource, as Azure Policy's deny effect and GCP organization policy constraints do, or flagging the violation and notifying administrators, as AWS Config rules do, optionally triggering automated remediation.
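The difference between blocking and notifying can be sketched as two enforcement modes applied to the same list of violations. The mode names below loosely mirror Azure Policy's deny and audit effects, but the Decision type and enforce function are hypothetical and only illustrate the control flow.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    allowed: bool
    notifications: list = field(default_factory=list)


def enforce(violations: list, mode: str) -> Decision:
    """Turn policy violations into an enforcement decision.

    Hypothetical modes:
      "deny"  -> block the change when any violation exists
      "audit" -> allow the change but notify administrators
    """
    if mode not in ("deny", "audit"):
        raise ValueError(f"unknown enforcement mode: {mode!r}")
    if not violations:
        return Decision(allowed=True)
    # Both modes record the violations; only "deny" blocks the change.
    return Decision(allowed=(mode == "audit"), notifications=list(violations))


blocked = enforce(["web: missing tag 'department'"], mode="deny")
audited = enforce(["web: missing tag 'department'"], mode="audit")
print(blocked.allowed, audited.allowed)
```

A common rollout pattern is to start a new rule in audit mode, review what it flags, and switch it to deny once the false positives are gone.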

Testing

Before enforcing policies in a production environment, it’s important to test them. This ensures that the rules act as expected and don’t disrupt your workflows. Tools like Conftest for OPA or Terraform Cloud’s policy checks allow you to simulate policy enforcement in a controlled environment. Testing policies in CI/CD pipelines can also catch issues early in the deployment process.
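Conftest fills this role for Rego policies. The same idea is shown here in Python with pytest-style tests as a stand-in, not Conftest itself: pair each policy with small fixtures for compliant and non-compliant inputs. The missing_tags helper is hypothetical.

```python
def missing_tags(tags, required):
    """Return the required tag keys that are absent from a resource's tags, sorted."""
    return sorted(set(required) - set(tags or {}))


# pytest collects functions named test_*; each one exercises one policy outcome.
def test_compliant_resource_has_no_violations():
    assert missing_tags({"department": "eng"}, ["department"]) == []


def test_missing_tag_is_reported():
    assert missing_tags({"Name": "web"}, ["department"]) == ["department"]


def test_null_tags_are_treated_as_empty():
    # An unset tags attribute can show up as null (None) in a JSON plan.
    assert missing_tags(None, ["department", "owner"]) == ["department", "owner"]
```

Running `pytest` on this file in CI gives fast feedback whenever someone edits a policy, before the policy ever touches a real environment.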

Monitoring

Even with policies in place, ongoing monitoring is necessary to ensure compliance within your infrastructure. Monitoring involves tracking resource configurations over time, logging violations, and generating reports. For example, AWS Config provides a dashboard to review compliance across all resources, while Azure Policy and GCP logging tools offer similar insights for their platforms. Continuous monitoring helps identify trends and adjust policies as needed.
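A compliance dashboard boils down to aggregating evaluation results per rule. This Python sketch assumes a hypothetical record format ({"resource", "rule", "compliant"}); AWS Config, Azure Policy, and GCP each export results in their own shape, so the parsing step would differ in practice.

```python
from collections import defaultdict


def compliance_summary(evaluations):
    """Summarize hypothetical evaluation records into per-rule compliance rates.

    Each record is a dict: {"resource": str, "rule": str, "compliant": bool}.
    Returns {rule: (compliant_count, total_count, percent_compliant)}.
    """
    counts = defaultdict(lambda: [0, 0])  # rule -> [compliant, total]
    for record in evaluations:
        stats = counts[record["rule"]]
        stats[1] += 1
        if record["compliant"]:
            stats[0] += 1
    return {
        rule: (ok, total, round(100 * ok / total, 1))
        for rule, (ok, total) in sorted(counts.items())
    }


records = [
    {"resource": "bucket-a", "rule": "require-encryption", "compliant": True},
    {"resource": "bucket-b", "rule": "require-encryption", "compliant": True},
    {"resource": "bucket-c", "rule": "require-encryption", "compliant": False},
    {"resource": "vm-1", "rule": "require-tags", "compliant": False},
]
print(compliance_summary(records))
```

Storing these summaries over time is what makes trends visible, for example a rule whose compliance rate keeps dropping after each release.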

By combining these elements, you create a strong framework for Policy as Code that not only enforces rules but also adapts to the changing needs of your cloud environment.

Implementing Policy as Code in AWS, GCP, and Azure

Now that we've covered the core elements, let's put them into practice with OPA as a central policy engine. We'll use OPA to validate Terraform plans for AWS, GCP, and Azure before anything is deployed, so that non-compliant resources are caught early and costly fixes are avoided. We'll also include the Terraform configurations and commands needed to set everything up, so you can see the process in action.

AWS: Validating Tagging Policies

For AWS, let’s enforce a policy that requires all EC2 instances to include a department tag. First, we’ll define a Terraform configuration to create an EC2 instance.

Save the following code to a file named aws_instance.tf:

This configuration defines an EC2 instance with a Name tag but no department tag, making it non-compliant with our policy.

Next, create a Rego file named aws_tags_policy.rego:

This policy checks each resource in the Terraform plan. If it finds an EC2 instance (aws_instance) without a department tag, it generates an error message.
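For readers who want to cross-check the rule outside OPA, here is a Python sketch of the same logic over Terraform's JSON plan format, where planned changes live under resource_changes and each entry's proposed state is in change.after. It is a re-expression of the check, not the Rego policy itself, and the function names are hypothetical.

```python
import json


def aws_missing_department(plan: dict) -> list:
    """Flag aws_instance resources in a Terraform JSON plan that lack a department tag."""
    messages = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "aws_instance":
            continue
        after = rc.get("change", {}).get("after")
        if after is None:  # the instance is being destroyed, so there is nothing to check
            continue
        tags = after.get("tags") or {}  # tags can be null when none are set
        if "department" not in tags:
            messages.append(f"{rc['address']} is missing the required 'department' tag")
    return messages


def check_plan_file(path: str) -> list:
    """Load a plan exported with terraform show -json and run the check."""
    with open(path, encoding="utf-8") as f:
        return aws_missing_department(json.load(f))
```

One design note: this sketch only reads tags. Tags inherited from the AWS provider's default_tags appear in tags_all instead, so a policy that should accept provider-level defaults would need to check that attribute too.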

Now that we’ve written the Terraform configuration and the OPA policy for AWS, let’s move on to validating it. The goal is to catch policy violations before deploying the resources, making sure that we don’t create non-compliant infrastructure.

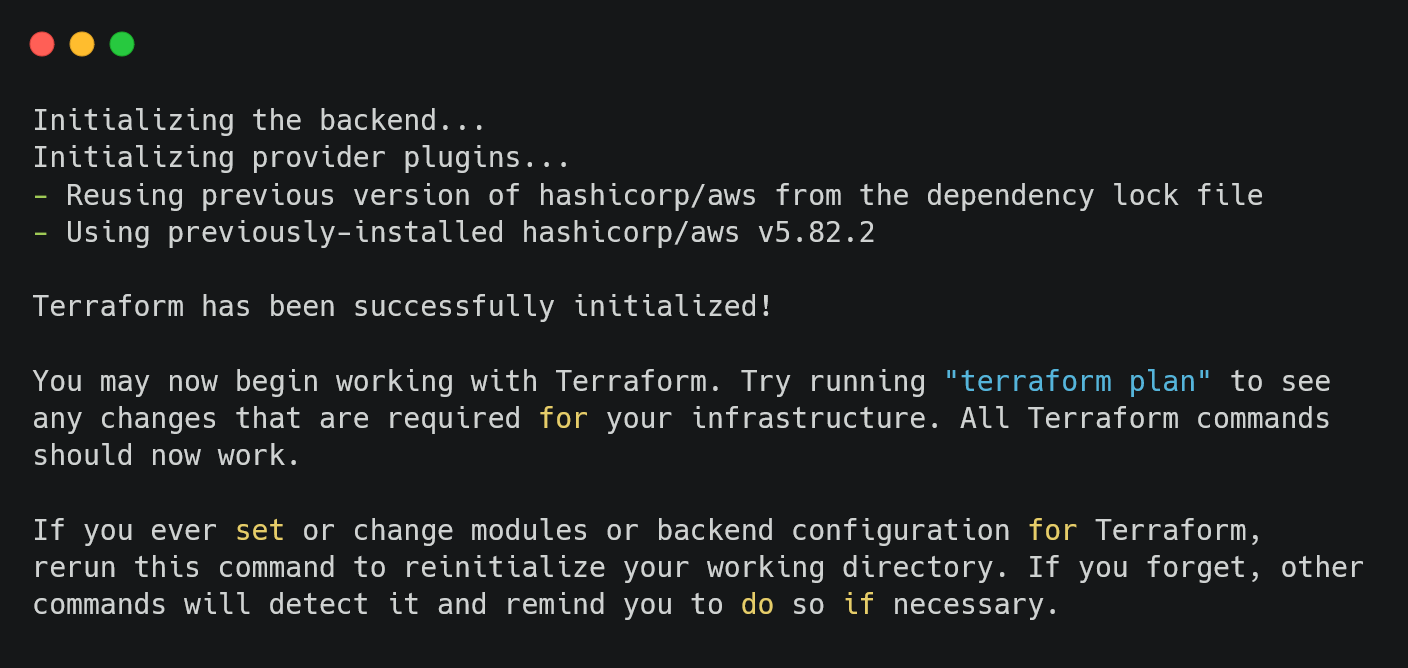

The first step in our validation process is to initialize Terraform. This makes sure that all necessary provider plugins are downloaded and your workspace is set up correctly. To do this, run `terraform init` in the directory that contains aws_instance.tf.

This command will download the AWS provider plugin and prepare your Terraform environment. You should see output confirming the initialization, including details about the plugins downloaded.

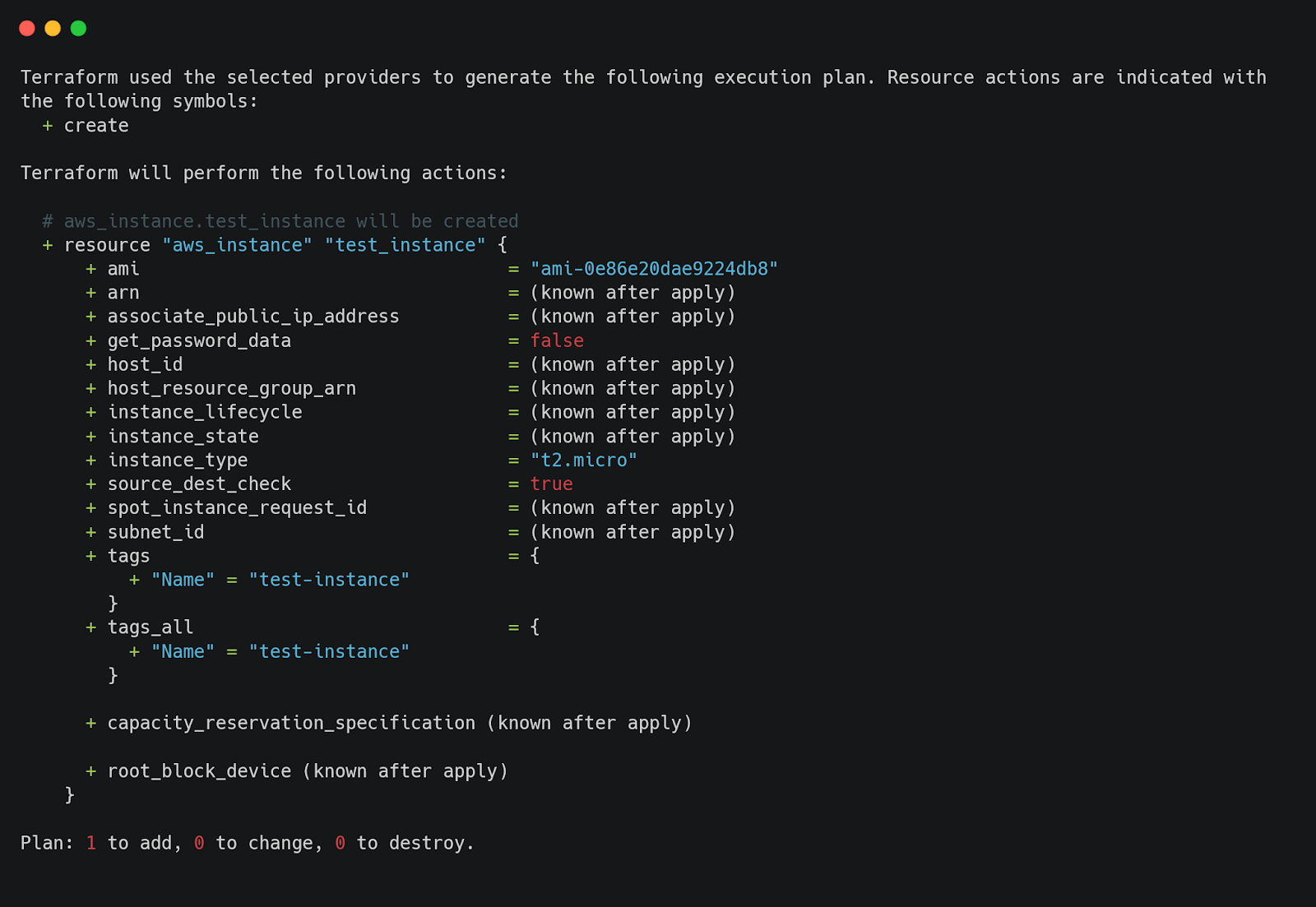

Once Terraform is initialized, we need to create a plan. The plan outlines the resources Terraform will create, and we'll also use it for validation. Generate the plan with `terraform plan -out=tfplan`.

This command saves the plan to a file named tfplan. The plan will include details about the EC2 instance defined in our configuration.

To validate the plan with OPA, we need to convert it into JSON, since OPA evaluates structured data. Run `terraform show -json tfplan > tfplan.json`.

This command converts the binary plan file into a JSON file named tfplan.json. This JSON file contains all the resource details that OPA will evaluate.
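It can help to look at what OPA will actually receive. In Terraform's JSON plan, each planned change is an entry in the top-level resource_changes array with an address, a type, and a change object holding actions, before, and after. This small Python sketch (hypothetical helper names) lists those entries.

```python
import json


def load_plan(path: str) -> dict:
    """Read the JSON document written by terraform show -json."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize_plan(plan: dict) -> list:
    """List (address, type, actions) for every planned resource change."""
    return [
        (rc["address"], rc["type"], rc["change"]["actions"])
        for rc in plan.get("resource_changes", [])
    ]
```

For example, `for row in summarize_plan(load_plan("tfplan.json")): print(row)` shows each resource address alongside actions such as create, update, or delete, which is a quick way to confirm a policy is looking at the resources you expect.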

Finally, we run the OPA validation. This step checks the Terraform plan against the OPA policy we wrote earlier. Use this command:

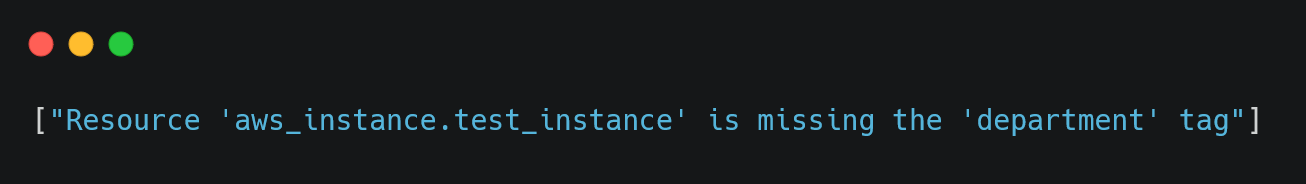

If the policy works correctly, you should see an error message like this:

This output confirms that our OPA policy caught the missing department tag.

If no violations are found, the output will be empty, meaning the configuration complies with the policy.

After successfully validating policies for AWS, let's move on to implementing Policy as Code for GCP and Azure. We'll follow a similar approach: defining Terraform configurations, writing OPA policies, and validating the plans. However, we'll keep the focus on what's unique to each platform to avoid repetition.

GCP: Restricting VM Types

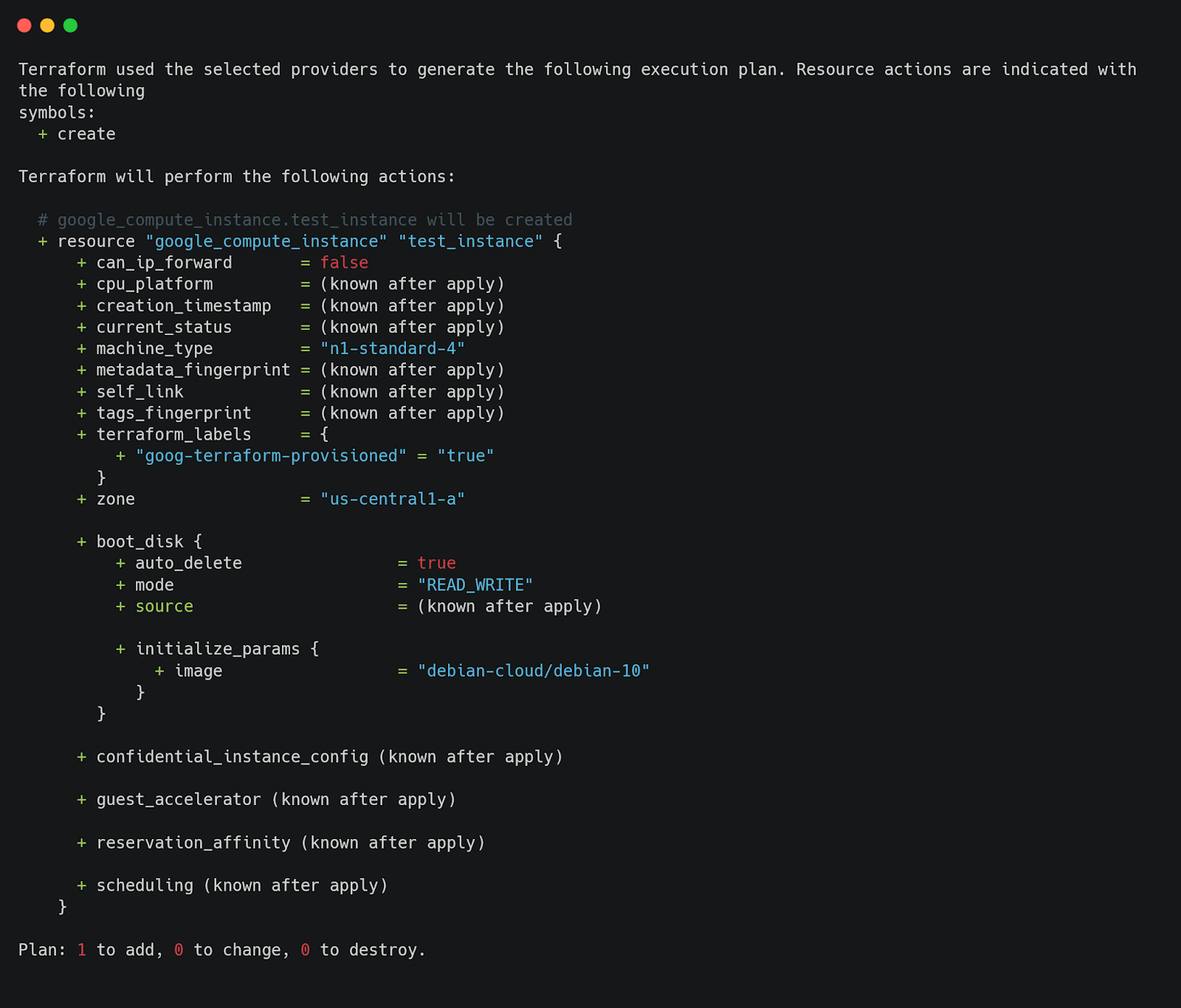

For GCP, let’s enforce a policy to make sure that only approved machine types are used for VM instances. The first step is to define a Terraform configuration for creating a VM instance. Save this code to a file named gcp_instance.tf:

This configuration creates a VM instance with the machine type n1-standard-4, which we’ll mark as non-compliant in our policy.

Next, create a policy in a file named gcp_vm_policy.rego. This policy checks whether the VM instance uses an approved machine type.

This policy ensures that only the machine types n1-standard-1 and n1-standard-2 are allowed.
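The allowlist logic translates directly into Python over the same JSON plan structure. This is a hypothetical cross-check of the rule for google_compute_instance resources, not the Rego file itself.

```python
# Approved machine types, matching the allowlist described above.
ALLOWED_MACHINE_TYPES = {"n1-standard-1", "n1-standard-2"}


def gcp_disallowed_machine_types(plan: dict) -> list:
    """Flag google_compute_instance resources whose machine_type is not approved."""
    messages = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "google_compute_instance":
            continue
        after = rc.get("change", {}).get("after")
        if after is None:  # the instance is being destroyed
            continue
        machine_type = after.get("machine_type")
        if machine_type not in ALLOWED_MACHINE_TYPES:
            messages.append(
                f"{rc['address']} uses machine type {machine_type!r}, "
                "which is not on the approved list"
            )
    return messages
```

An allowlist fails closed: any new or unexpected machine type is rejected until someone deliberately adds it, which is usually what you want for cost and capacity controls.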

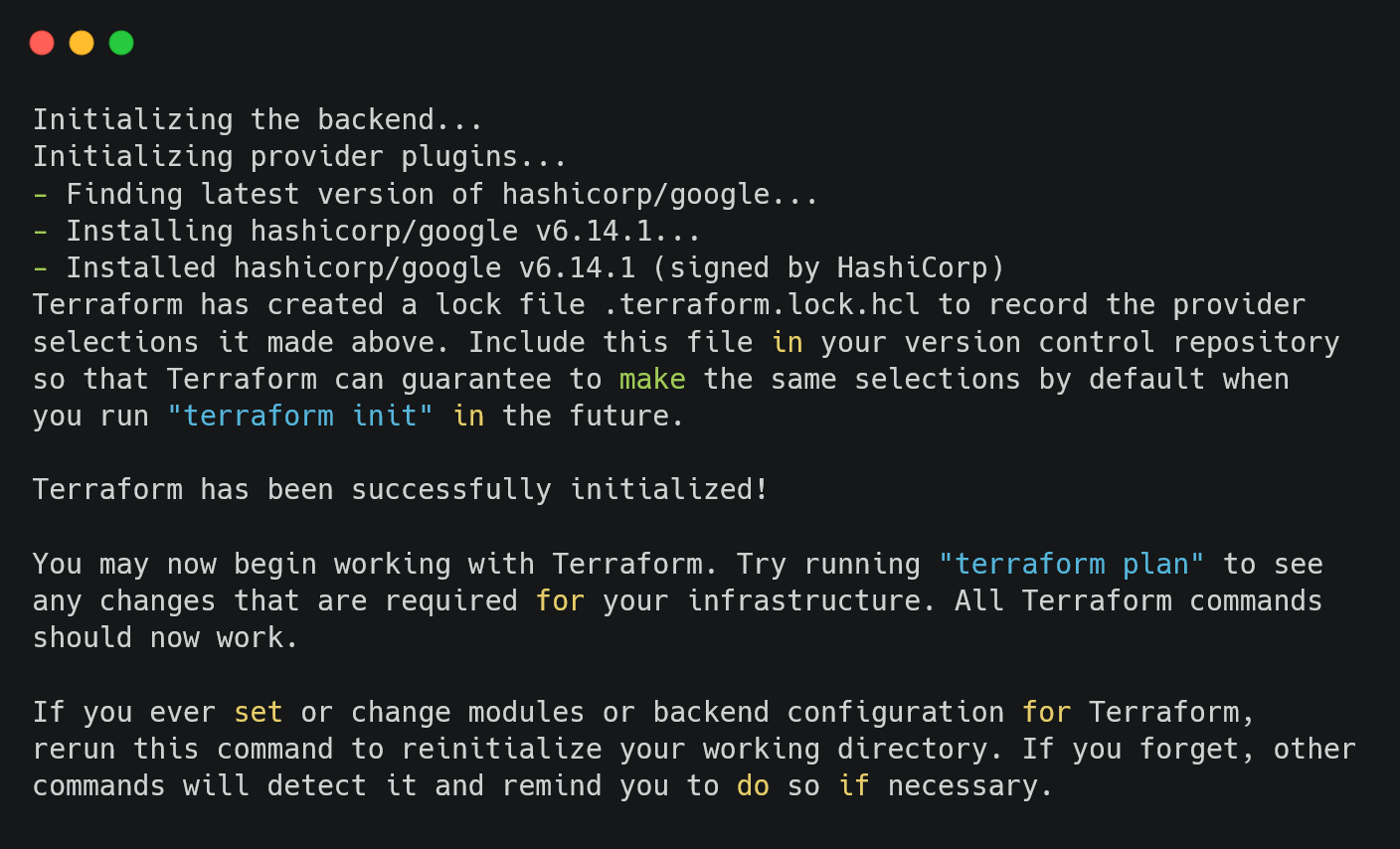

Now, let's move to validation. The first step is to prepare the Terraform workspace by running `terraform init`.

This initializes the workspace and downloads the necessary provider plugins for Google Cloud. You should see confirmation messages indicating that Terraform is ready to use.

Now, run the following commands to create a plan and convert it to JSON:

Evaluate the plan using OPA:

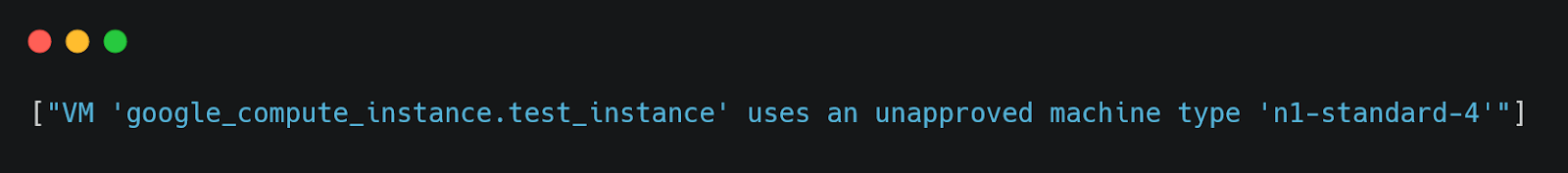

If the policy catches violations, the output will look like this:

Azure: Validating Tagging Policies

Moving to Azure, our goal is to enforce tagging policies for resource groups. Specifically, we want every resource group to include an environment tag, ensuring clarity and compliance with organizational standards.

Save the following Terraform configuration as azure_resource_group.tf:

This configuration defines a resource group with only the Name tag, making it non-compliant with our policy.

To enforce the environment tag, save the following policy as azure_tags_policy.rego:

This policy flags any resource group that does not include the required environment tag.
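Here is the same kind of cross-check for azurerm_resource_group, sketched in Python. One Azure-specific wrinkle: Azure treats tag names as case-insensitive, so this hypothetical sketch accepts Environment or ENVIRONMENT as well. Whether your Rego policy should do the same is a choice for your organization.

```python
def azure_missing_environment(plan: dict) -> list:
    """Flag azurerm_resource_group resources whose tags lack an environment key.

    Tag names are compared case-insensitively; tighten this if your
    standard requires one exact spelling.
    """
    messages = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "azurerm_resource_group":
            continue
        after = rc.get("change", {}).get("after")
        if after is None:  # the resource group is being destroyed
            continue
        tag_names = {name.lower() for name in (after.get("tags") or {})}
        if "environment" not in tag_names:
            messages.append(f"{rc['address']} is missing the required 'environment' tag")
    return messages
```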

Now, run:

Evaluate the plan:

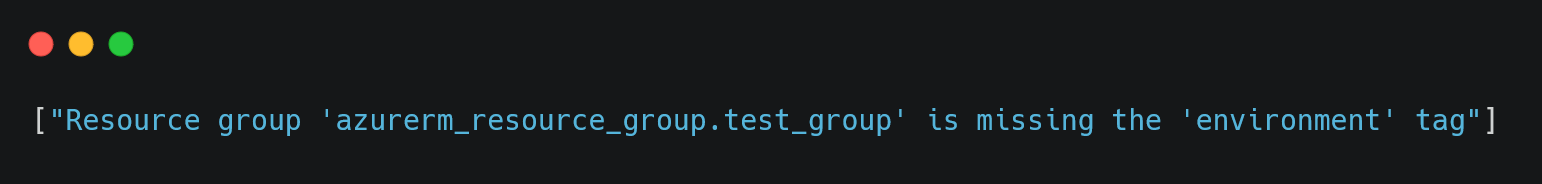

If a violation is detected, OPA will produce output like this:

Empty output means the configuration complies with the policy.

With these steps, we’ve validated policies for AWS, GCP, and Azure using Terraform and OPA. Each cloud platform presents unique requirements, but OPA allows us to enforce consistent policies across environments. By catching non-compliance at the planning stage, we can avoid deploying resources that violate organizational standards, ensuring secure and well-governed infrastructure.

Best Practices for Implementing and Managing Policy as Code

Now that we’ve explored how to validate policies across AWS, GCP, and Azure using OPA, it’s important to establish practices that make Policy as Code sustainable and effective. These practices focus on maintaining consistency across cloud providers, embedding validation into your workflows, and continuously improving policies to meet evolving requirements.

Standardizing Policies Across Cloud Providers

Maintaining consistency is one of the biggest challenges in multi-cloud environments. Each cloud provider has its own way of defining and enforcing policies, which can create gaps or overlaps. To address this, policies should be standardized as much as possible. With tools like OPA, you can define policies in a provider-agnostic way so that the same rules apply across AWS, GCP, and Azure. For example, a policy that requires keys like environment or owner can be written once in Rego and applied to resources on all three platforms, as long as it accounts for naming differences: AWS and Azure call this metadata tags, while GCP calls it labels. This approach reduces complexity and makes policies easier to manage at scale.
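One way to write a rule once and apply it everywhere is to separate the rule (which keys are required) from a per-provider mapping (which attribute holds the metadata). This Python sketch assumes the Terraform resource types used in this article; the mapping, the required keys, and the function name are illustrative and would need extending for other resource types.

```python
# Which plan attribute holds key/value metadata for each resource type.
# AWS and Azure call these tags; GCP calls them labels.
METADATA_ATTRIBUTE = {
    "aws_instance": "tags",
    "azurerm_resource_group": "tags",
    "google_compute_instance": "labels",
}

# The single, provider-agnostic rule.
REQUIRED_KEYS = {"environment", "owner"}


def missing_metadata(plan: dict) -> dict:
    """Apply one required-key rule to AWS, Azure, and GCP resources in a JSON plan."""
    report = {}
    for rc in plan.get("resource_changes", []):
        attribute = METADATA_ATTRIBUTE.get(rc.get("type"))
        after = rc.get("change", {}).get("after")
        if attribute is None or after is None:
            continue  # unmapped resource type, or a resource being destroyed
        present = set(after.get(attribute) or {})
        missing = sorted(REQUIRED_KEYS - present)
        if missing:
            report[rc["address"]] = missing
    return report
```

Adding a provider or resource type then means adding one line to the mapping, not rewriting the rule.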

Centralizing policies in a version-controlled repository like Git makes sure that changes are traceable and can go through proper reviews before being implemented. This not only helps teams collaborate effectively but also creates an audit trail that’s useful for compliance purposes. When policies are stored centrally, they’re easier to update, test, and deploy across environments, keeping them consistent and aligned with organizational standards.

Integrating Policy Validation with CI/CD Workflows

Embedding policy validation into CI/CD pipelines is important for catching violations before they reach production. By integrating OPA checks directly into your pipelines, you make sure that every change to your infrastructure is evaluated against the defined policies. For example, a Terraform plan can be generated as part of the CI pipeline, converted to JSON, and validated using OPA. If any violations are detected, the pipeline can fail the build, preventing non-compliant resources from being deployed.
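The pipeline step described above reduces to: load the JSON plan, run every check, print what failed, and exit non-zero if anything did. This Python sketch shows that gate; the function names are hypothetical, and a check is any callable that takes the plan dict and returns a list of messages.

```python
import json
import sys


def ci_gate(plan: dict, checks) -> int:
    """Run every check against a parsed JSON plan and return a CI exit code.

    Returns 0 when there are no violations and 1 otherwise, so a
    pipeline step that exits with this code fails the build on violations.
    """
    violations = [message for check in checks for message in check(plan)]
    for message in violations:
        print(f"POLICY VIOLATION: {message}", file=sys.stderr)
    return 1 if violations else 0


def main(plan_path: str, checks) -> int:
    """Load the output of terraform show -json and gate on it."""
    with open(plan_path, encoding="utf-8") as f:
        return ci_gate(json.load(f), checks)
```

A pipeline step could then call `sys.exit(main("tfplan.json", checks))` right after `terraform show -json tfplan > tfplan.json`, with whatever check functions your team maintains. Because the exit code is the only contract with the CI system, the same step works unchanged across pipeline tools.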

This process doesn’t just catch errors; it also reinforces good practices among developers by providing immediate feedback. Developers can run these checks locally before committing their code, reducing iteration cycles and ensuring compliance early in the development lifecycle. Standardizing these pipeline configurations across teams helps maintain uniformity, regardless of specific workflows or tools being used. For example, a shared CI/CD template can include predefined steps for generating plans, running OPA evaluations, and handling violations, ensuring no team skips these important checks.

Continuous Improvement Through Auditing and Feedback

Policies are not static. As your organization grows and regulatory requirements change, policies need to evolve to address new challenges. Continuous improvement starts with monitoring compliance post-deployment. Even with pre-deployment checks, real-world conditions can lead to unexpected deviations. Tools like AWS Config, Azure Policy, or GCP’s Cloud Logging provide valuable insights into the compliance status of deployed resources. By regularly analyzing this data, you can identify trends, such as recurring violations or areas where policies might need to be refined.

Audit logs from OPA validations and CI/CD pipelines are another important source of feedback. They can highlight patterns, such as certain teams frequently missing required tags or using non-approved configurations. Using this data, you can update your policies to address these gaps and prevent future violations. Automating parts of this feedback loop, such as notifying teams about violations or suggesting remediation actions, can further simplify the process. For example, if an audit reveals a common issue like missing encryption, you can adjust your policies to enforce encryption and notify developers to align with the updated standards.
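Spotting patterns such as "one team keeps missing the same tag" is a counting problem. This Python sketch assumes a hypothetical audit record format ({"team", "rule"}) collected from pipeline runs, and surfaces team and rule pairs that recur at least a chosen number of times.

```python
from collections import Counter


def recurring_violations(audit_log, threshold=3):
    """Return (team, rule, count) for pairs seen at least `threshold` times.

    Results are ordered most frequent first, then by team and rule name.
    """
    counts = Counter((entry["team"], entry["rule"]) for entry in audit_log)
    return sorted(
        ((team, rule, n) for (team, rule), n in counts.items() if n >= threshold),
        key=lambda item: (-item[2], item[0], item[1]),
    )


audit_log = [
    {"team": "payments", "rule": "require-tags"},
    {"team": "payments", "rule": "require-tags"},
    {"team": "payments", "rule": "require-tags"},
    {"team": "data", "rule": "require-encryption"},
]
print(recurring_violations(audit_log))
```

The output is a short, prioritized list that can drive targeted fixes, such as better module defaults for one team or a clearer error message for one rule.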

By adopting these practices, you can make sure that your infrastructure stays secure, compliant, and aligned with organizational goals, even in complex cloud environments.

Managing policies across multi-cloud environments can be difficult, especially when dealing with inconsistencies, compliance gaps, or unmanaged resources. Firefly simplifies this process by providing a unified platform for cloud governance. Whether you're using AWS, GCP, or Azure, Firefly integrates easily to help you enforce policies, monitor compliance, and maintain control over your infrastructure.

Firefly for Cloud Governance

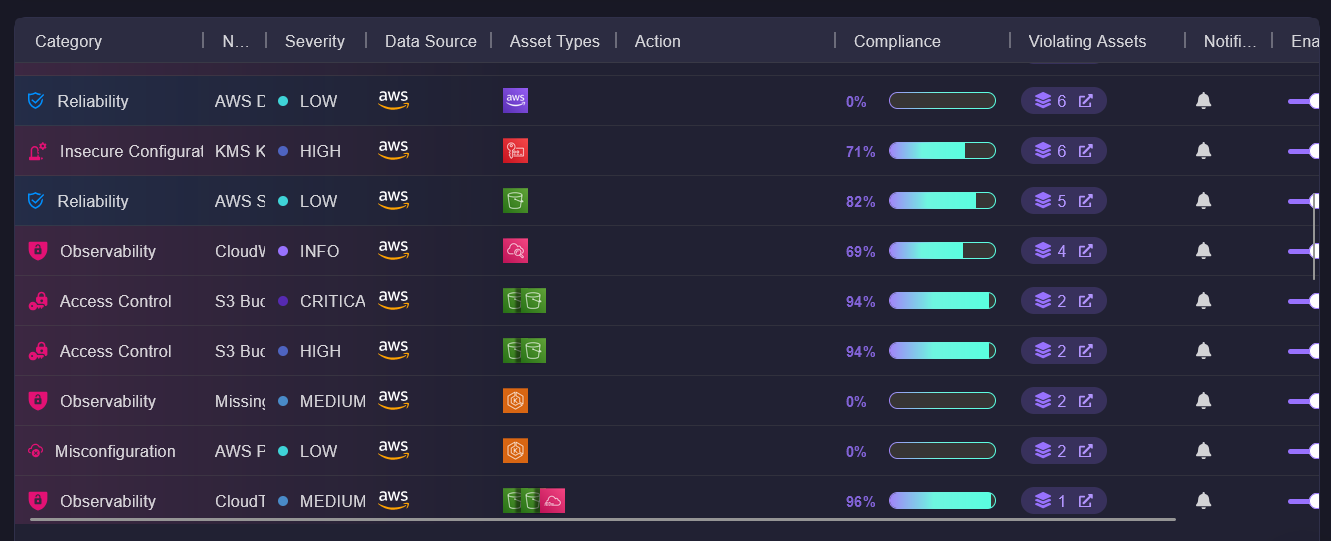

Firefly scans your environment to identify unmanaged assets, compliance issues, and policy violations, making it easier to address gaps. For example, it highlights missing tags, unencrypted storage, or misconfigured networking rules, ensuring that nothing is missed across accounts or projects.

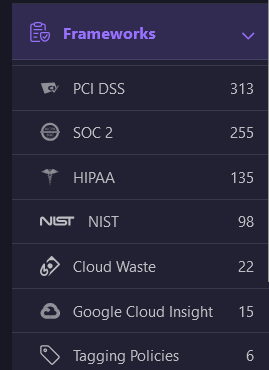

Firefly also simplifies policy enforcement with built-in frameworks like PCI DSS, SOC 2, and HIPAA. These ready-to-use rules help meet regulatory requirements without needing to build everything from scratch. It ensures that data is encrypted, access controls are in place, and tagging standards are followed, saving time while keeping your environment organized and compliant.

To make things easier to manage, Firefly organizes checks into categories like access control, encryption, and networking. This lets you focus on specific areas such as securing access, ensuring proper monitoring, or fixing misconfigurations. The dashboard provides clear insights into compliance status, breaking down violations by category and helping you prioritize what needs attention.

By connecting to your cloud providers, scanning resources, and offering tools to enforce policies, Firefly helps you stay on top of governance. It reduces effort from your end, ensures consistency, and gives you control over your infrastructure without leaving any gaps.

Frequently Asked Questions

What is Policy as Code in AWS?

Policy as Code in AWS refers to defining and enforcing policies as machine-readable code, using tools like AWS Config, Lambda, or Open Policy Agent (OPA) to automate governance and compliance. It ensures consistent enforcement of rules across AWS resources.

What are the benefits of Policy as Code?

Policy as Code improves consistency, automates compliance, reduces manual errors, and ensures security by enforcing policies at scale during resource creation and modifications.

What is the maximum number of policies in AWS?

By default, an AWS account can have up to 1,500 customer managed IAM policies, and you can request an increase up to 5,000. AWS managed policies don't count toward this quota.

How many types of policy are there in AWS?

AWS supports six types of policies: identity-based policies, resource-based policies, permissions boundaries, AWS Organizations service control policies (SCPs), access control lists (ACLs), and session policies.

How many policies can I attach to an IAM role?

By default, you can attach up to 10 managed policies (AWS managed or customer managed) to an IAM role. This quota can be increased to a maximum of 20.