What is Pulumi?

Pulumi is a modern infrastructure as code (IaC) platform founded in 2017 that lets you create, deploy, and manage cloud resources using general-purpose programming languages such as JavaScript, TypeScript, Python, Go, and C#. Traditional IaC tools rely on domain-specific or configuration languages instead: Terraform uses HCL, and AWS CloudFormation uses JSON or YAML templates.

Supports Multi-Cloud

Pulumi supports the major cloud providers, including AWS, Azure, and Google Cloud, as well as Kubernetes. This means you can manage resources across multiple clouds in a unified way using the same programming language. For example, you could use Pulumi to deploy an EKS cluster on AWS while also deploying other resources, such as databases, on Azure or Google Cloud.

Uses Real Programming Languages

Terraform and CloudFormation use specialized configuration formats (HCL for Terraform and JSON/YAML for CloudFormation). Pulumi instead lets you define infrastructure in general-purpose programming languages such as Python, TypeScript, or Go. For example, if you regularly build web applications in Python, you can also use Python to spin up an EKS cluster, define VPCs, or create S3 buckets. Keeping application and infrastructure code in the same language reduces the learning curve and can shorten development cycles.

Integrates with Existing Tools

Pulumi integrates with CI/CD pipelines, version control systems, and existing monitoring tools like Prometheus and Datadog. For example, if you're using GitHub Actions to deploy a web app to AWS, Pulumi can be part of that process. When you push new code to GitHub, Pulumi can automatically spin up or update resources like EKS clusters, databases, load balancers, or Kubernetes resources directly by calling the Kubernetes API in the background. This keeps your infrastructure and application in sync without needing to log into AWS and make changes manually.

State Management

Pulumi maintains the state of your infrastructure and provides an automatic mechanism for managing and updating infrastructure deployments. If you deploy an EKS cluster and later want to add or modify some configurations, Pulumi keeps track of those changes and applies them incrementally, reducing the risk of configuration drift.
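To make the idea of incremental updates concrete, here is a minimal sketch in plain Python. It is not Pulumi's engine; it only illustrates how comparing a recorded state with the desired definition yields a small set of create, update, and delete steps. The resource names, properties, and the Kubernetes version string are hypothetical.

```python
from typing import Dict, List, Tuple

Resource = Dict[str, object]


def plan_changes(
    recorded: Dict[str, Resource], desired: Dict[str, Resource]
) -> List[Tuple[str, str]]:
    """Compare the recorded state with the desired definition and list the steps."""
    steps: List[Tuple[str, str]] = []
    for name, props in desired.items():
        if name not in recorded:
            steps.append(("create", name))
        elif recorded[name] != props:
            steps.append(("update", name))
    for name in recorded:
        if name not in desired:
            steps.append(("delete", name))
    return steps


# Hypothetical state recorded after the last deployment.
recorded_state = {
    "eks-cluster": {"version": "1.29"},
    "node-group": {"desired_size": 2},
}

# Hypothetical definition after editing the program.
desired_state = {
    "eks-cluster": {"version": "1.29"},
    "node-group": {"desired_size": 3},
    "app-bucket": {"versioning": True},
}

for action, name in plan_changes(recorded_state, desired_state):
    print(f"{action}: {name}")
```

Only the node group and the new bucket appear in the plan; the unchanged cluster is left alone, which is the behavior the paragraph above describes.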

Automated Deployments

With Pulumi, you can automate the creation, updating, and deletion of cloud resources. This saves hours of work and reduces the chance of mistakes during deployment of your application. To automate the deployment of your EKS cluster, including creating subnets, VPCs, IAM roles, and worker nodes, you just define the setup in code, and Pulumi takes care of the rest.

Infrastructure Visualization

Pulumi provides the capability to visualize your infrastructure, offering a clearer understanding of how your resources depend on each other. In addition to the code that defines your infrastructure, Pulumi gives you a visual map of your cloud resources and how they interact, making it easier to troubleshoot and optimize your setup.

Policy Enforcement

Pulumi supports defining and enforcing policies on your infrastructure, ensuring that resources are created and managed in line with your organization’s standards and compliance requirements. For example, you can create policies that block the deployment of public-facing EC2 instances unless they have specific security settings, like encryption or strict firewall rules. This helps every resource meet security best practices and supports compliance frameworks such as HIPAA or SOC 2.
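The shape of such a rule can be sketched in plain Python. This is an illustration of the check itself, not Pulumi's policy API; the function name and the property names (`public`, `encrypted`, `open_ports`) are hypothetical.

```python
from typing import Any, Dict, List


def check_instance(name: str, props: Dict[str, Any]) -> List[str]:
    """Return the policy violations for one instance definition."""
    violations: List[str] = []
    if not props.get("public", False):
        return violations  # private instances pass this particular policy
    if not props.get("encrypted", False):
        violations.append(f"{name}: public instance must have encryption enabled")
    if 22 in props.get("open_ports", []):
        violations.append(f"{name}: public instance must not expose SSH (port 22)")
    return violations


# Hypothetical instance definitions to evaluate.
instances = {
    "web-1": {"public": True, "encrypted": True, "open_ports": [443]},
    "web-2": {"public": True, "encrypted": False, "open_ports": [22, 443]},
    "worker-1": {"public": False, "encrypted": False, "open_ports": [22]},
}

for instance_name, instance_props in instances.items():
    for violation in check_instance(instance_name, instance_props):
        print(violation)
```

A real policy would run a check like this before deployment and block the update when any violation is reported.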

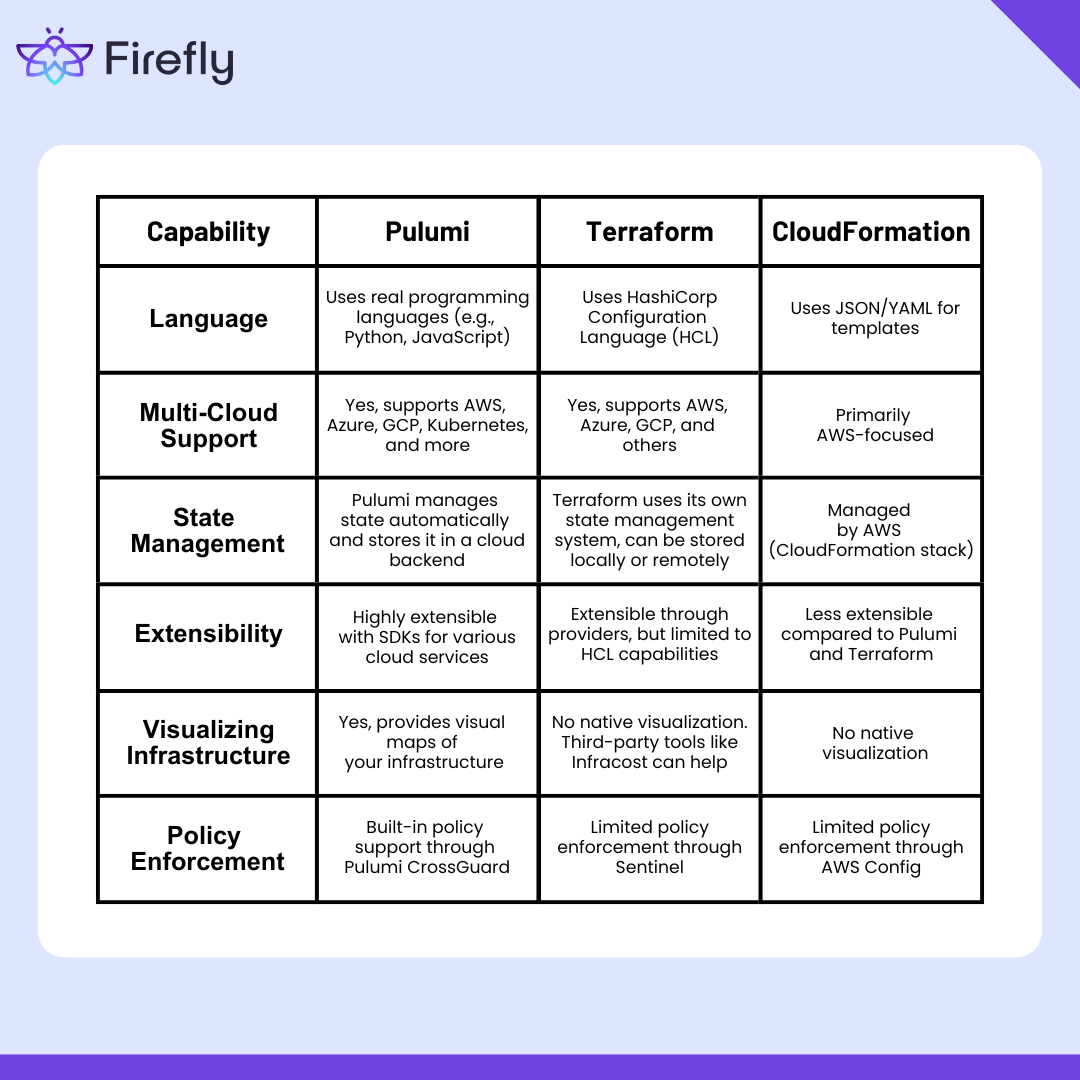

Pulumi vs Terraform vs CloudFormation

Pulumi, Terraform, and CloudFormation are three popular options, each with its strengths and limitations. Understanding how they differ helps you select the best fit for your project. Here’s how Pulumi stands out compared to Terraform and CloudFormation:

Pulumi is a tool that lets you manage cloud infrastructure using regular programming languages like Python or JavaScript. It offers multi-cloud support, automatic state tracking, and security rule enforcement. While Terraform and CloudFormation provide similar features, Pulumi is especially useful for developers who want to manage infrastructure like they write application code.

Benefits of EKS in AWS

Amazon Elastic Kubernetes Service (EKS) is a fully managed service that simplifies running Kubernetes clusters on AWS. Kubernetes is a popular platform for automating the deployment, scaling, and management of containerized applications, and its worker nodes can run on different operating systems such as Ubuntu or Amazon Linux. Even though the Kubernetes community is huge, setting up and maintaining Kubernetes can be tricky and time-consuming. EKS removes much of this complexity by handling the cluster’s control plane for you and integrating it with other AWS services.

This means you can focus on running and scaling your Kubernetes applications while AWS takes care of the control plane, giving you the power of Kubernetes without having to operate it yourself.

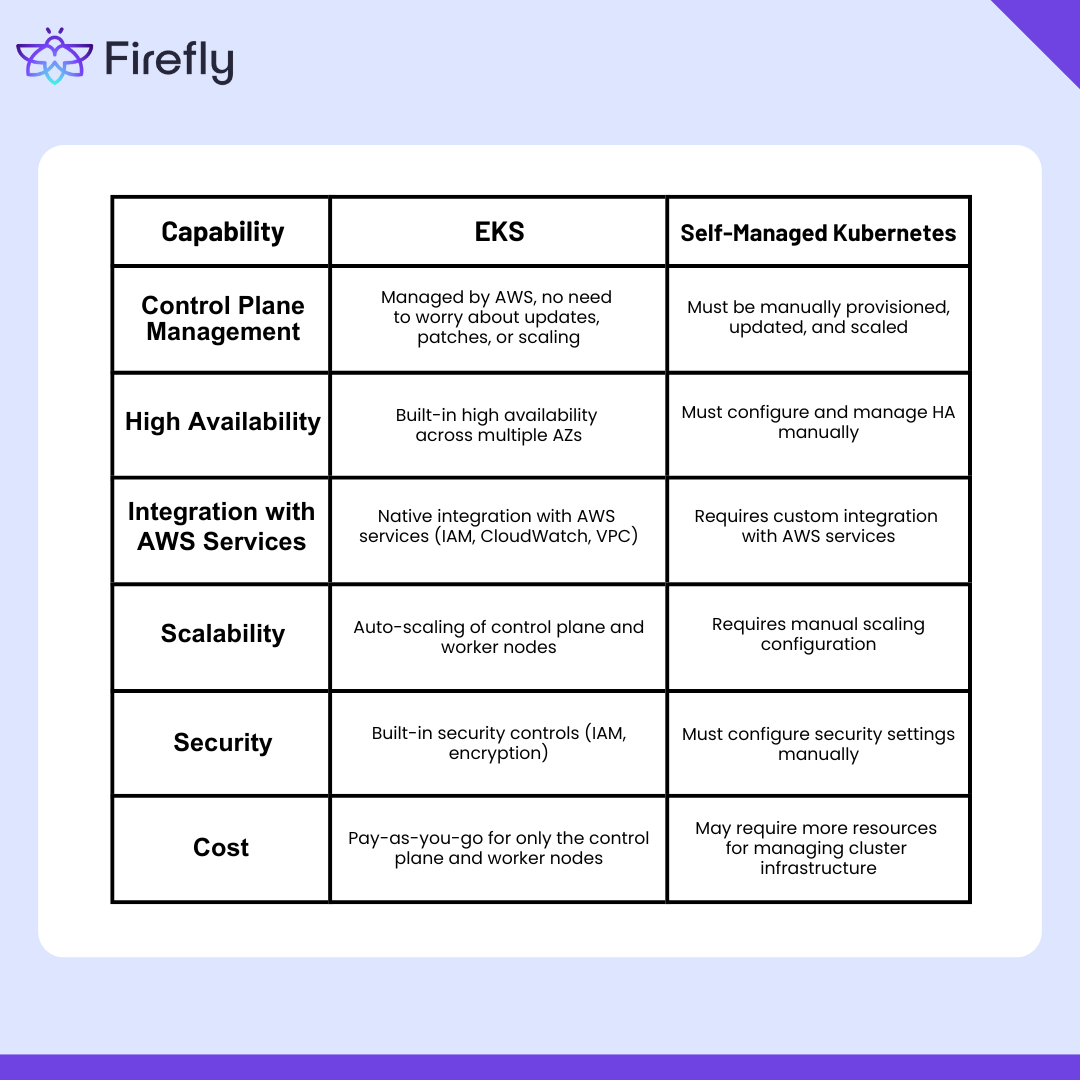

Benefits of Using EKS Over Self-Managed Kubernetes

Here’s a comparison of EKS with self-managed Kubernetes clusters to highlight the benefits of using a managed service:

EKS removes the difficult job of setting up and maintaining the Kubernetes control plane while giving you access to AWS's scaling, security, and monitoring features.

Prerequisites for Deploying EKS with Pulumi

Before creating an Amazon EKS cluster with Pulumi, you need to set up a few tools and configure your environment. This setup ensures that Pulumi can interact with AWS, manage Kubernetes, and deploy infrastructure without issues. Let’s go through each step to make sure everything is ready.

Installing and Configuring Pulumi CLI

The Pulumi CLI is the main tool to define and deploy cloud infrastructure. It allows you to write infrastructure as code and run commands to build and manage resources on your AWS account.

To install the Pulumi CLI on Windows, download the installer from Pulumi's official site, run the .exe file, and follow the installation prompts. On macOS or Linux, run the install script with `curl -fsSL https://get.pulumi.com | sh`:

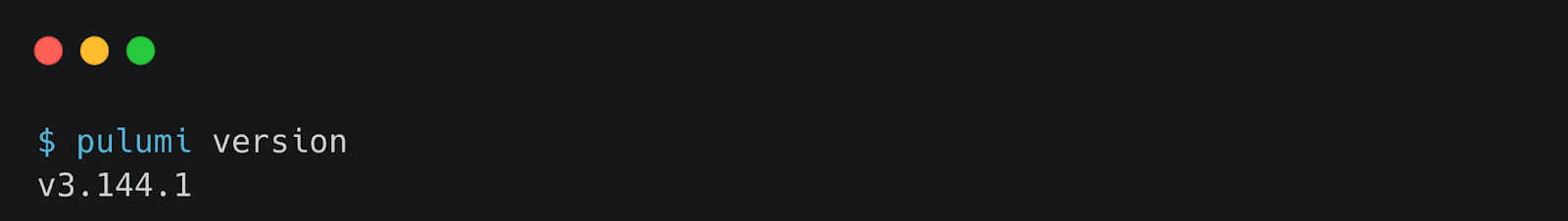

This downloads and installs the Pulumi CLI automatically. Once installed, check that Pulumi is ready to use by running `pulumi version`.

This should display the version number, confirming that the CLI is installed.

Installing AWS CLI and Setting Up IAM Permissions

The AWS Command Line Interface (CLI) lets you configure the credentials that Pulumi uses to talk to AWS. When you run Pulumi commands to deploy an EKS cluster, Pulumi's AWS provider picks up those credentials to connect to AWS and create the necessary infrastructure.

Download and install the AWS CLI from the official AWS documentation, following the guide for your operating system. After installing, verify it by running `aws --version`.

To allow the AWS CLI and Pulumi to interact with AWS services, configure your AWS credentials by running `aws configure`.

You’ll be prompted for four values: the AWS Access Key ID, which works like a username for AWS; the AWS Secret Access Key, which works like a password; the default region, for example us-east-1 (N. Virginia) or us-west-2 (Oregon); and the output format, such as json or table.

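To set up IAM permissions, open the IAM section of the AWS console, create a new IAM user, and attach the AdministratorAccess policy. This policy grants full access, which keeps the tutorial simple; outside a sandbox account, prefer a narrower set of permissions. Then create an access key for the user and supply the Access Key ID and Secret Access Key when you run aws configure.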
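`aws configure` stores these values in INI-style files under `~/.aws`. As a quick sanity check, the sketch below parses credentials text with Python's standard `configparser` and reports which required keys are missing from a profile. The helper name and the sample values are hypothetical, and the sketch reads a string rather than your real credentials file.

```python
import configparser
from typing import List

REQUIRED_KEYS = ("aws_access_key_id", "aws_secret_access_key")


def missing_credential_keys(ini_text: str, profile: str = "default") -> List[str]:
    """Return the required keys that are absent or empty in the given profile."""
    # Interpolation is disabled so '%' characters in values are read literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(ini_text)
    if not parser.has_section(profile):
        return list(REQUIRED_KEYS)
    return [
        key
        for key in REQUIRED_KEYS
        if not parser.get(profile, key, fallback="").strip()
    ]


# Placeholder content in the same layout as ~/.aws/credentials.
sample_credentials = """
[default]
aws_access_key_id = EXAMPLE_KEY_ID
aws_secret_access_key = EXAMPLE_SECRET
"""

print(missing_credential_keys(sample_credentials))
```

To check your own setup, you could pass in the text of `~/.aws/credentials` instead of the sample string.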

Configuring kubectl CLI

kubectl is a command-line tool used to interact with Kubernetes clusters. Once your EKS cluster is deployed, kubectl allows you to manage it by deploying applications, scaling services, and monitoring its health.

Install the kubectl CLI from Kubernetes' official site and verify the installation with `kubectl version --client`.

Python Environment Setup for Pulumi

Pulumi lets you write infrastructure as code in multiple languages, and for this guide, we’ll use Python. This means you need Python installed and configured. If it is not already installed, download it from python.org. On Windows, make sure to check the “Add Python to PATH” box during installation.

Verify the Python installation with `python --version` (or `python3 --version` on systems where Python 3 is installed under that name).

With these tools and configurations in place, you’re ready to deploy an EKS cluster using Pulumi.
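If you want to confirm all of the prerequisites in one go, a small script can look for each CLI on your PATH. This is a minimal sketch using only the standard library; the helper name is hypothetical, and it only checks that the executables exist, not their versions.

```python
import shutil
from typing import Iterable, List

# The command-line tools this guide relies on.
REQUIRED_TOOLS = ["pulumi", "aws", "kubectl"]


def missing_tools(tools: Iterable[str]) -> List[str]:
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


missing = missing_tools(REQUIRED_TOOLS)
if missing:
    print("Missing from PATH:", ", ".join(missing))
else:
    print("All required CLIs were found on PATH.")
```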

Pulumi Configuration for EKS Cluster Creation

Let us configure Pulumi to create an Amazon EKS cluster. We’ll go step-by-step to set up the project, write the necessary code, and configure key components such as networking, IAM roles, and scaling policies.

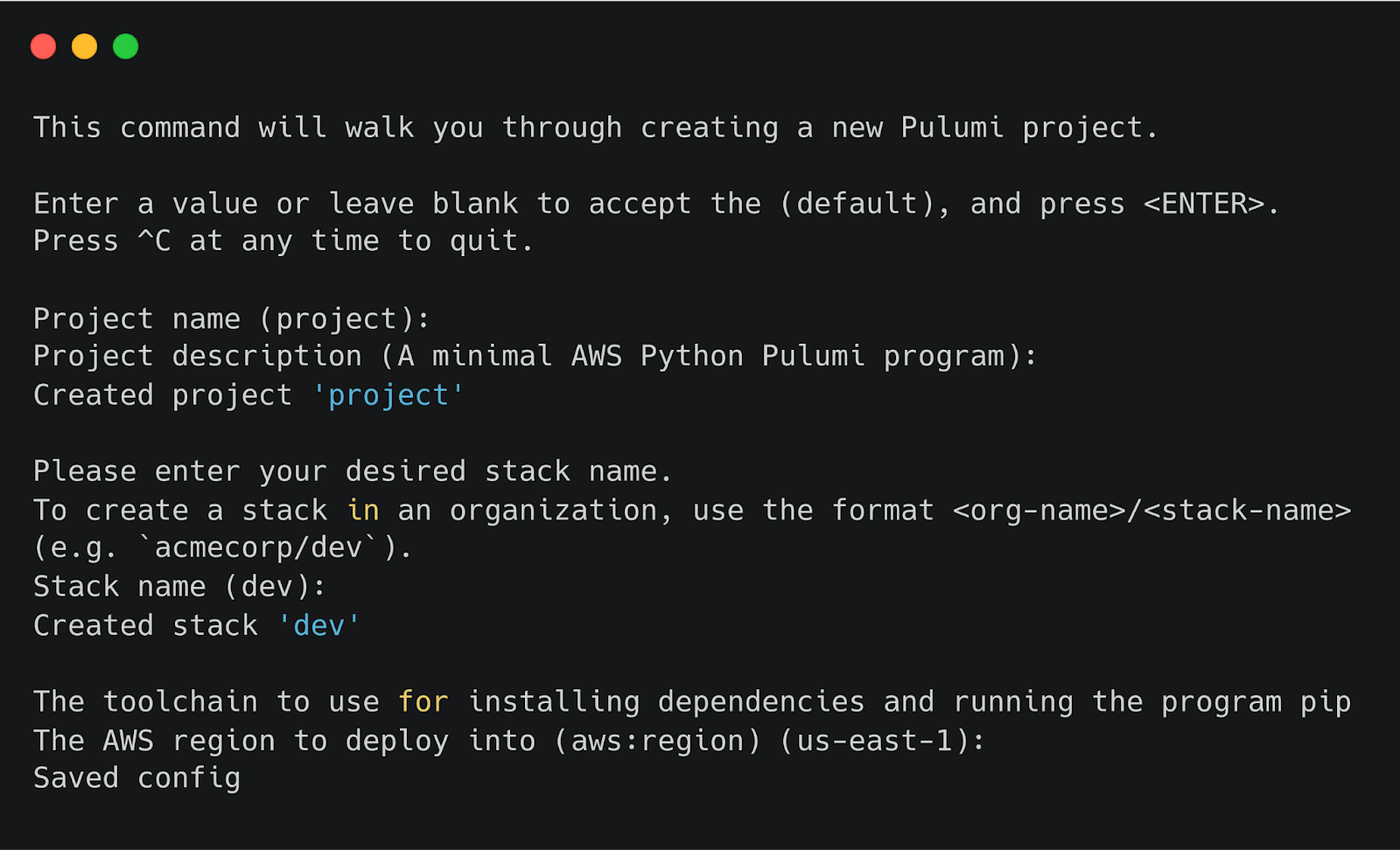

Initializing a New Pulumi Project for AWS

A Pulumi project holds all the instructions for building your cloud resources. Think of it as a directory containing the plan for what you want to create. To get started, open your terminal in the folder where you want to set up the project and run `pulumi new aws-python` to initialize it.

aws-python specifies that the project will use Python and deploy AWS resources.

Pulumi will ask for some inputs, such as the project name, description, AWS region, and Pulumi stack. A stack is like an environment, such as dev, staging, or production. You can also accept the default values.

Configuring Networking With a VPC

EKS clusters need a VPC to operate securely. In your __main__.py, add the following configuration to create a VPC, subnets, internet gateway, security group, and route tables:
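One part of that configuration is choosing address ranges. EKS expects subnets in at least two Availability Zones, so it helps to plan the CIDR blocks before declaring the resources. The sketch below uses Python's standard `ipaddress` module to carve a VPC range into one subnet per zone. The VPC CIDR, the zone names, and the helper name are assumptions for illustration, not values from this guide.

```python
import ipaddress
from typing import Dict, List


def plan_subnets(vpc_cidr: str, zones: List[str], new_prefix: int = 24) -> Dict[str, str]:
    """Assign one subnet CIDR of the given prefix length to each zone, in order."""
    network = ipaddress.ip_network(vpc_cidr)
    blocks = network.subnets(new_prefix=new_prefix)  # generator of consecutive subnets
    return {zone: str(next(blocks)) for zone in zones}


# Hypothetical VPC range and Availability Zones.
subnet_plan = plan_subnets("10.0.0.0/16", ["us-east-1a", "us-east-1b"])
for zone, cidr in subnet_plan.items():
    print(f"{zone}: {cidr}")
```

Each resulting CIDR can then be used as the address block of the matching subnet in your Pulumi program.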

Writing Pulumi Code to Create an EKS Cluster

Now, we define the EKS cluster itself along with the permissions it requires:

In role_arn, use the IAM role that gives EKS permissions to manage AWS resources. Also, define the subnets where the cluster will be deployed.
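An IAM role used this way needs a trust policy that lets the right AWS service assume it, plus the matching AWS managed policies. The sketch below builds those trust policies as JSON with the standard library, for both the cluster role and the role used by worker nodes later on. The helper name and the `ROLES` structure are hypothetical; the service principals and managed policy ARNs are the standard AWS ones for EKS.

```python
import json


def assume_role_policy(service: str) -> str:
    """Build a trust policy that lets the given AWS service assume the role."""
    return json.dumps(
        {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Effect": "Allow",
                    "Principal": {"Service": service},
                    "Action": "sts:AssumeRole",
                }
            ],
        }
    )


# Hypothetical grouping of what each role needs.
ROLES = {
    "cluster": {
        "trust_policy": assume_role_policy("eks.amazonaws.com"),
        "managed_policies": ["arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"],
    },
    "node": {
        "trust_policy": assume_role_policy("ec2.amazonaws.com"),
        "managed_policies": [
            "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy",
            "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy",
            "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly",
        ],
    },
}

print(json.dumps(json.loads(ROLES["cluster"]["trust_policy"]), indent=2))
```

In a Pulumi program, the trust policy string would typically be passed as the role's assume-role policy, and each ARN attached to the role with a policy attachment resource.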

Adding Managed Node Groups and Configuring Scaling Policies

EKS clusters need worker nodes to run applications. Create a managed node group to manage these nodes and attach the required policies to its IAM role:

The desired_size, min_size, and max_size settings control how many worker nodes run, so the node group can scale with application demand:
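These three values have to be consistent with each other: the desired size must sit between the minimum and the maximum. A small, standard-library sketch of that check is shown here. The class name and the sample sizes are hypothetical; only the field names come from the text above.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ScalingConfig:
    desired_size: int
    min_size: int
    max_size: int

    def __post_init__(self) -> None:
        # Reject inconsistent values before they reach a deployment.
        if self.min_size < 0:
            raise ValueError("min_size cannot be negative")
        if not (self.min_size <= self.desired_size <= self.max_size):
            raise ValueError("expected min_size <= desired_size <= max_size")

    def as_args(self) -> Dict[str, int]:
        """Return the values as keyword arguments for a node group definition."""
        return {
            "desired_size": self.desired_size,
            "min_size": self.min_size,
            "max_size": self.max_size,
        }


# Hypothetical sizes: start with two nodes, allow between one and three.
scaling = ScalingConfig(desired_size=2, min_size=1, max_size=3)
print(scaling.as_args())
```

Validating the numbers up front gives a clear error in your own code instead of a failed deployment halfway through an update.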

Deploying the EKS Cluster

Once all configurations are in place, you can run:

This installs the required Kubernetes package. Then run `pulumi up` to create the resources:

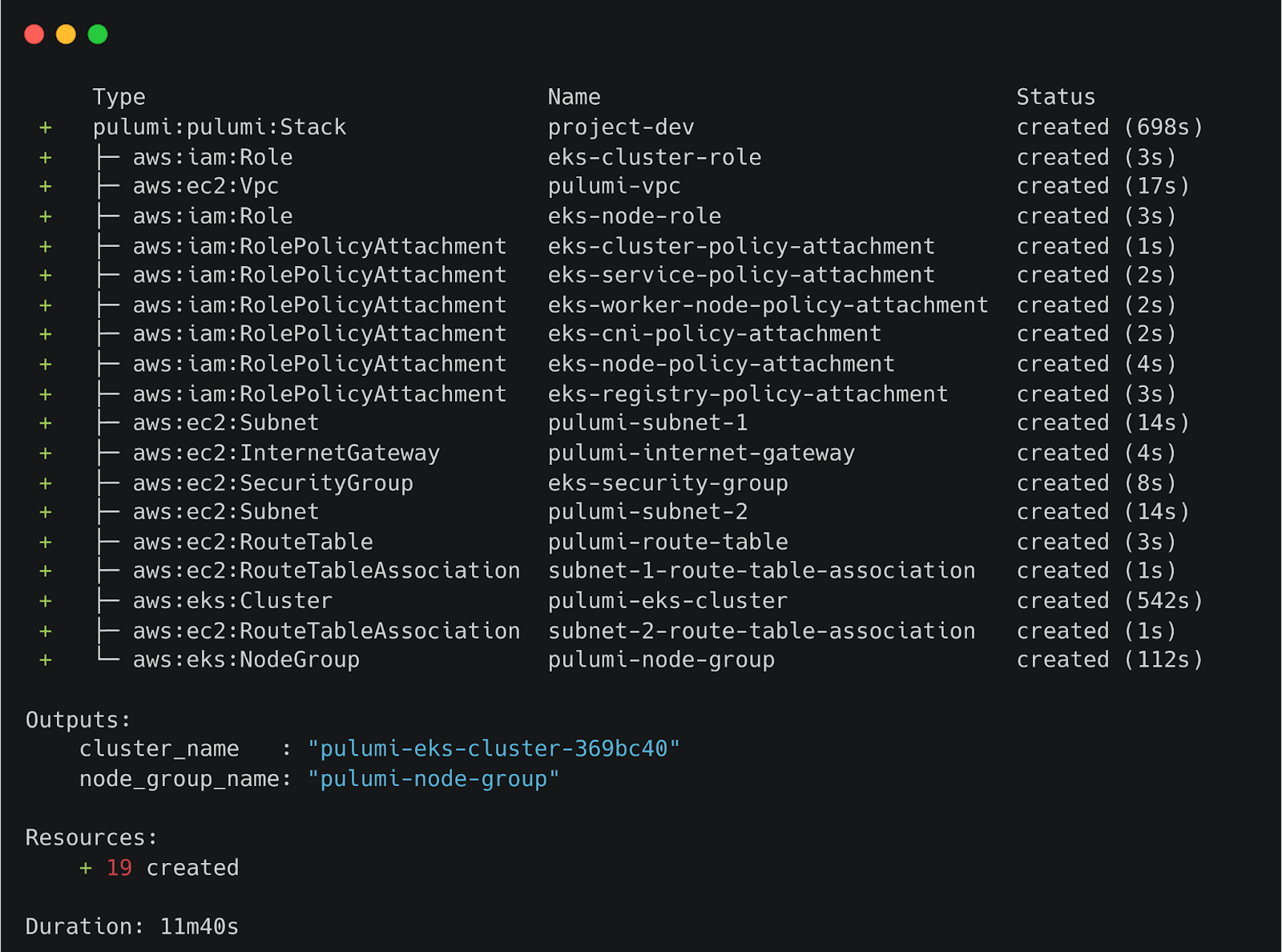

This command will show you the resources that will be created on AWS. Once you approve it, all the resources are created with their name and status.

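Once the cluster is deployed on AWS, update your local kubeconfig with `aws eks update-kubeconfig --name <cluster-name> --region <region>`, substituting your own cluster name and region: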

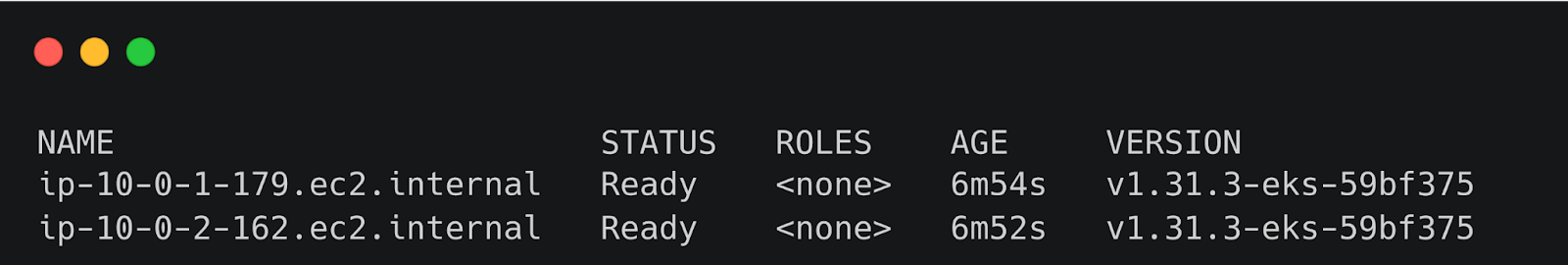

Once deployed, verify the cluster by running `kubectl get nodes`.

This command will print the two nodes you created with Pulumi.
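If you want to script this check, for example in a CI job, you can ask kubectl for JSON and count the nodes whose Ready condition is true. The sketch below is one way to do it with the standard library. The function names and the sample node names are hypothetical, and `fetch_nodes` is defined but not called here because it needs access to a live cluster.

```python
import json
import subprocess
from typing import List


def ready_nodes(node_list: dict) -> List[str]:
    """Return the names of nodes whose Ready condition has status "True"."""
    ready = []
    for node in node_list.get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        if any(c.get("type") == "Ready" and c.get("status") == "True" for c in conditions):
            ready.append(node["metadata"]["name"])
    return ready


def fetch_nodes() -> dict:
    """Run `kubectl get nodes -o json` against the current kubeconfig context."""
    result = subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)


# Hypothetical output in the same shape as `kubectl get nodes -o json`.
sample_nodes = {
    "items": [
        {
            "metadata": {"name": "node-a"},
            "status": {"conditions": [{"type": "Ready", "status": "True"}]},
        },
        {
            "metadata": {"name": "node-b"},
            "status": {"conditions": [{"type": "Ready", "status": "False"}]},
        },
    ]
}

print(ready_nodes(sample_nodes))
```

Against a real cluster, you would call `ready_nodes(fetch_nodes())` and compare the count with the desired size of your node group.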

You can delete all of these resources at once whenever you want by running `pulumi destroy`.

Disaster Recovery and Backup for EKS

When managing applications on EKS, things can go wrong: accidental deletions, hardware failures, or misconfigurations. That’s why having a disaster recovery plan is important. Let’s discuss how to back up your EKS cluster and restore it when needed, using tools like Velero and Pulumi to automate the process.

Backup Strategies for EKS Clusters and Persistent Data

There are two main things you need to back up. The first is the cluster configuration, which includes the EKS settings, node groups, and IAM roles. The second is persistent data, such as databases or other stateful applications running on your cluster.

Since EKS clusters are often defined in Pulumi, keeping the Pulumi code in __main__.py backed up (for example, in version control) ensures the cluster can be recreated easily. Tools like Velero can also back up Kubernetes resources such as pods, services, and deployments directly. For applications that use storage, such as databases, back up the Persistent Volumes to avoid losing critical data.

Using Velero with Pulumi for EKS Backups

Velero is an open-source tool designed to back up and restore Kubernetes clusters, including persistent data. Velero takes snapshots of cluster resources and Persistent Volumes, stores their backup in AWS S3 or other cloud storage, and restores cluster resources and data from these snapshots whenever required.

Start by installing the Velero CLI:

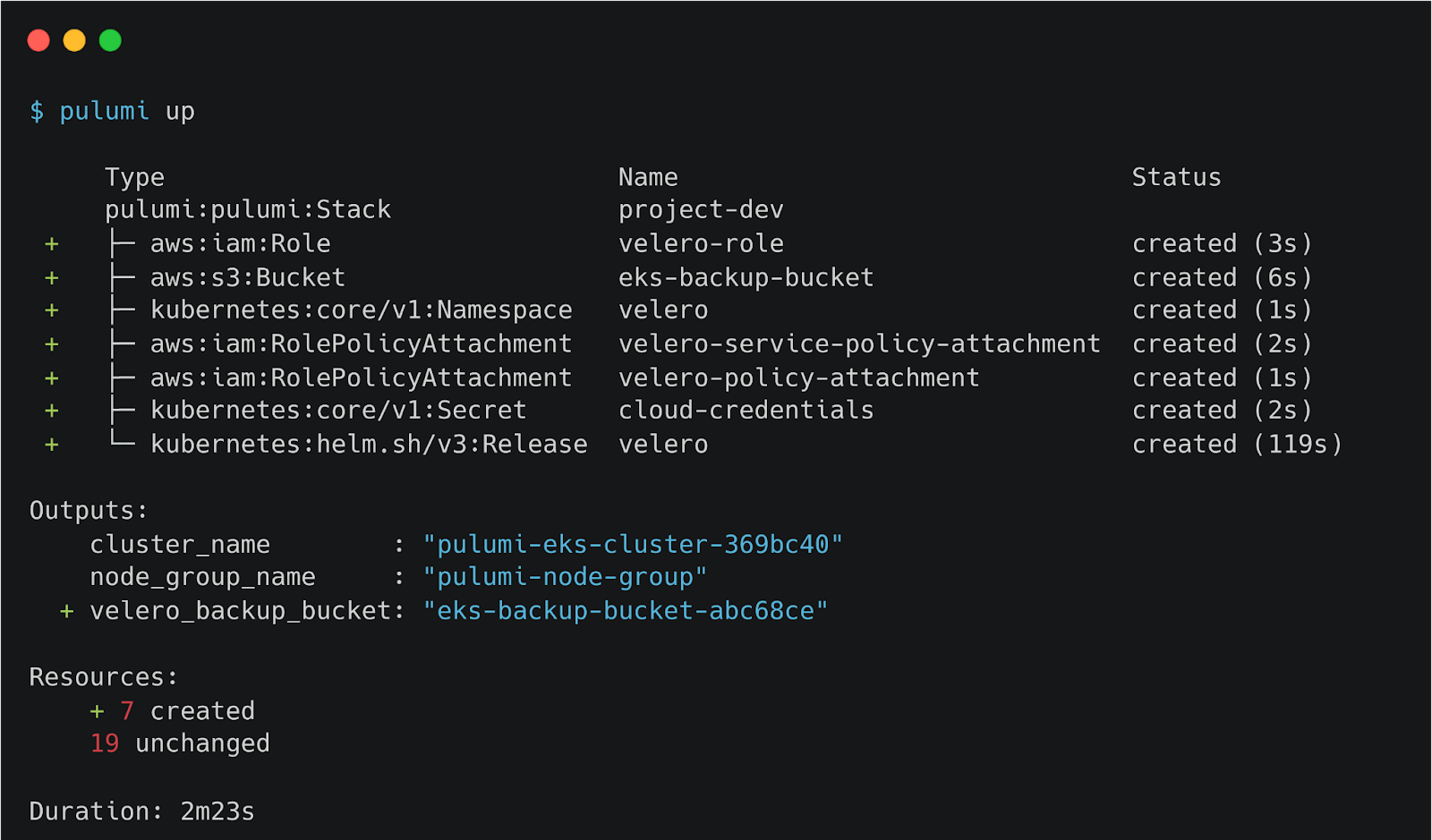

Add this to your Pulumi project:

Replace the values in aws_access_key_id and aws_secret_access_key with your keys. With this configuration, Pulumi installs Velero in the EKS cluster and creates an S3 bucket for Velero to store the snapshots. Run pulumi up again to apply the changes.
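Rather than pasting keys directly into your program, one option is to read them from the environment and render the credentials text at deploy time. The sketch below assumes the INI layout used by AWS credentials files, which is also what Velero's AWS plugin reads, and takes the keys from the standard `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables. The function name and sample values are hypothetical.

```python
import os
from typing import Mapping


def velero_credentials(env: Mapping[str, str] = os.environ) -> str:
    """Render AWS credentials in INI form from environment-style variables."""
    key_id = env.get("AWS_ACCESS_KEY_ID")
    secret = env.get("AWS_SECRET_ACCESS_KEY")
    if not key_id or not secret:
        raise ValueError("AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY must both be set")
    return (
        "[default]\n"
        f"aws_access_key_id={key_id}\n"
        f"aws_secret_access_key={secret}\n"
    )


# Placeholder values; in practice, call velero_credentials() with no arguments.
sample_env = {
    "AWS_ACCESS_KEY_ID": "EXAMPLE_KEY_ID",
    "AWS_SECRET_ACCESS_KEY": "EXAMPLE_SECRET",
}
print(velero_credentials(sample_env))
```

This keeps the secret out of source control; Pulumi's own secret configuration is another way to achieve the same goal.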

Restoring EKS Clusters and Applications from Backups

Restoring an EKS cluster is like rewinding time to the last backup. Velero lets you restore individual parts (like pods or volumes) or the entire cluster.

Deploy an Nginx deployment or a pod in the cluster:

Take the backup of your cluster with Velero:

Now, delete the pod:

Restore everything from the backup snapshot:

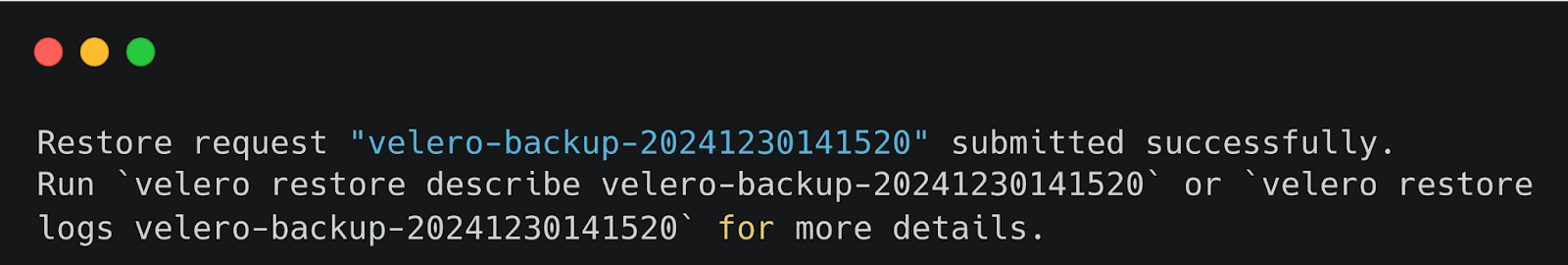

After executing the restore process, you will see the success log of the restore request.

If you want to restore a specific namespace:

If you want to restore persistent volumes only:

Verify the restoration with kubectl, for example by running `kubectl get pods` to confirm that the deleted pod is back.
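The backup and restore steps in this section, including the namespace-scoped and volume-only variants, can be assembled programmatically if you want to run them from a script. The sketch below only builds the Velero command lines; it does not execute them. The helper names, the backup name, and the namespace are hypothetical, while the subcommands and flags are standard Velero CLI options.

```python
import shlex
from typing import List, Optional, Sequence


def backup_command(backup_name: str) -> List[str]:
    """Build the command that creates a backup of the cluster."""
    return ["velero", "backup", "create", backup_name]


def restore_command(
    backup_name: str,
    namespaces: Optional[Sequence[str]] = None,
    resources: Optional[Sequence[str]] = None,
) -> List[str]:
    """Build a restore command, optionally limited to namespaces or resource types."""
    command = ["velero", "restore", "create", "--from-backup", backup_name]
    if namespaces:
        command += ["--include-namespaces", ",".join(namespaces)]
    if resources:
        command += ["--include-resources", ",".join(resources)]
    return command


# Hypothetical backup name and namespace.
commands = [
    backup_command("eks-backup"),
    restore_command("eks-backup"),
    restore_command("eks-backup", namespaces=["default"]),
    restore_command(
        "eks-backup",
        resources=["persistentvolumes", "persistentvolumeclaims"],
    ),
]
for command in commands:
    print(shlex.join(command))
```

Each list can be passed to `subprocess.run` once the Velero CLI is installed and pointed at your cluster.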

Firefly for Kubernetes and EKS Management

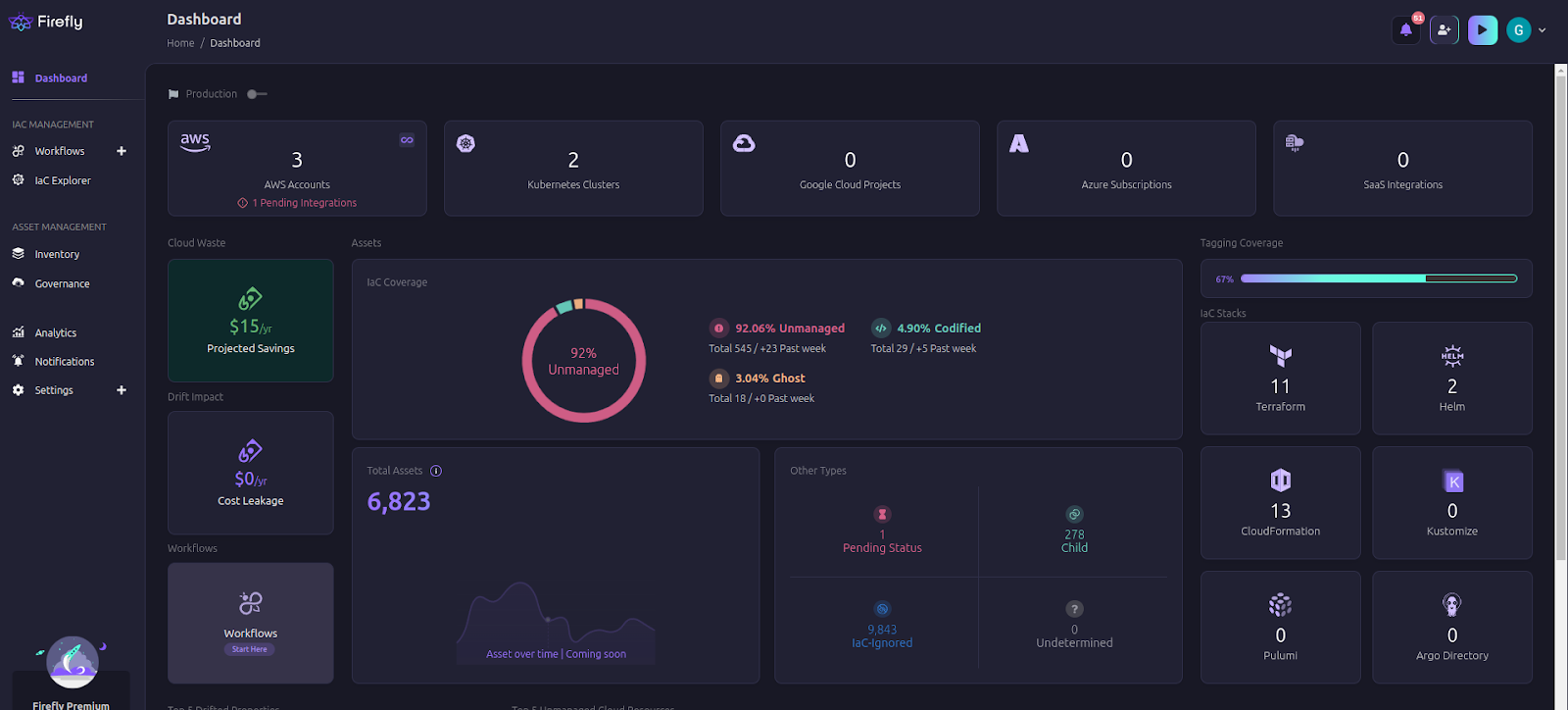

Managing Kubernetes clusters like EKS can get complex as the number of services, pods, and deployments grows. Firefly simplifies this by providing an interactive dashboard that gives a clear view of what’s happening in your clusters. It also helps keep your clusters secure and compliant with industry standards like HIPAA, SOC 2, and GDPR, which is crucial if you're handling sensitive data.

Firefly’s dashboard is like a control center for your EKS cluster. It reports on cluster health, showing which pods are running, failing, or using too many resources. You also get alerts if a Kubernetes deployment accidentally exposes data. In addition, Firefly identifies unused resources and estimates how much money you could save by removing them.

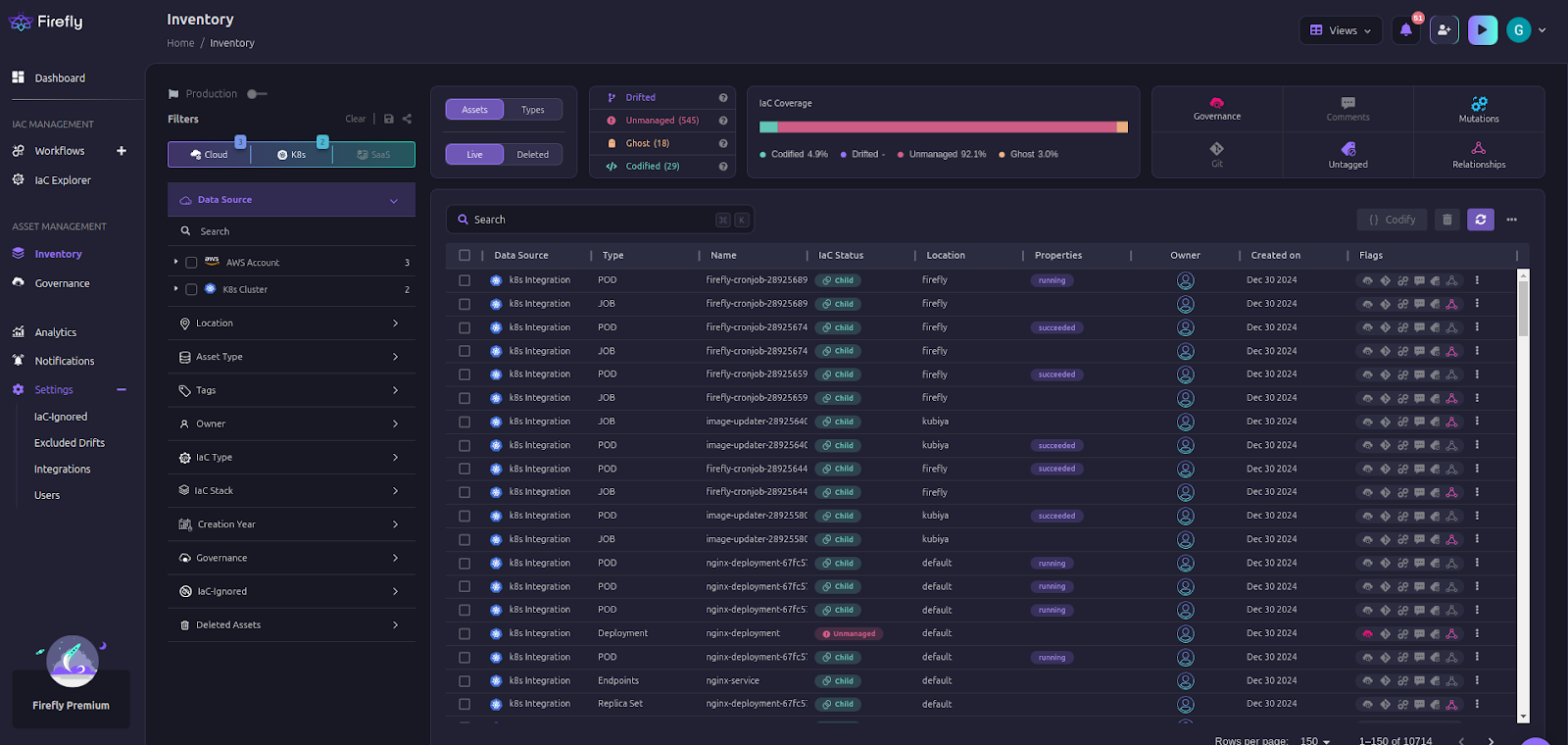

You can check out all your Kubernetes resources in Firefly’s inventory.

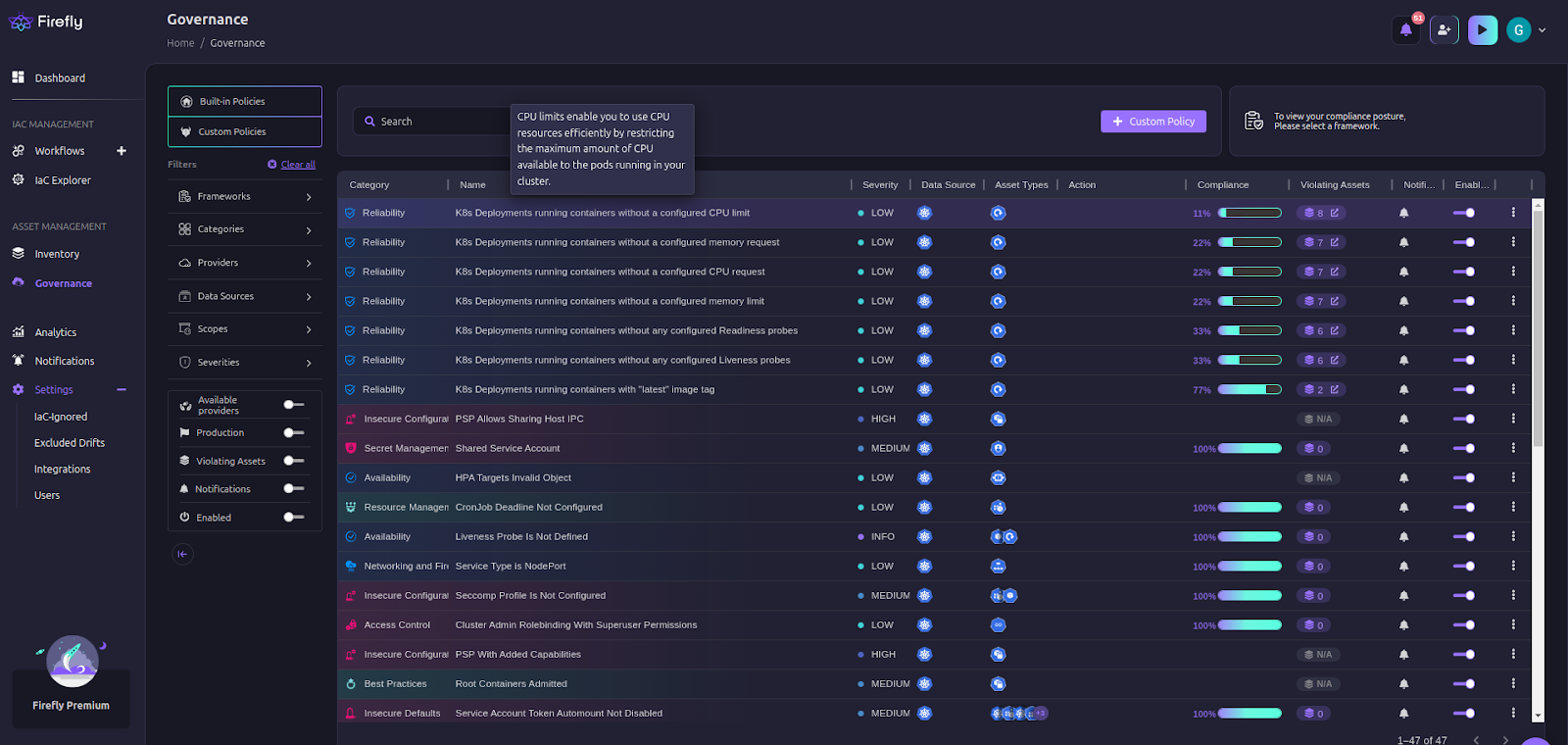

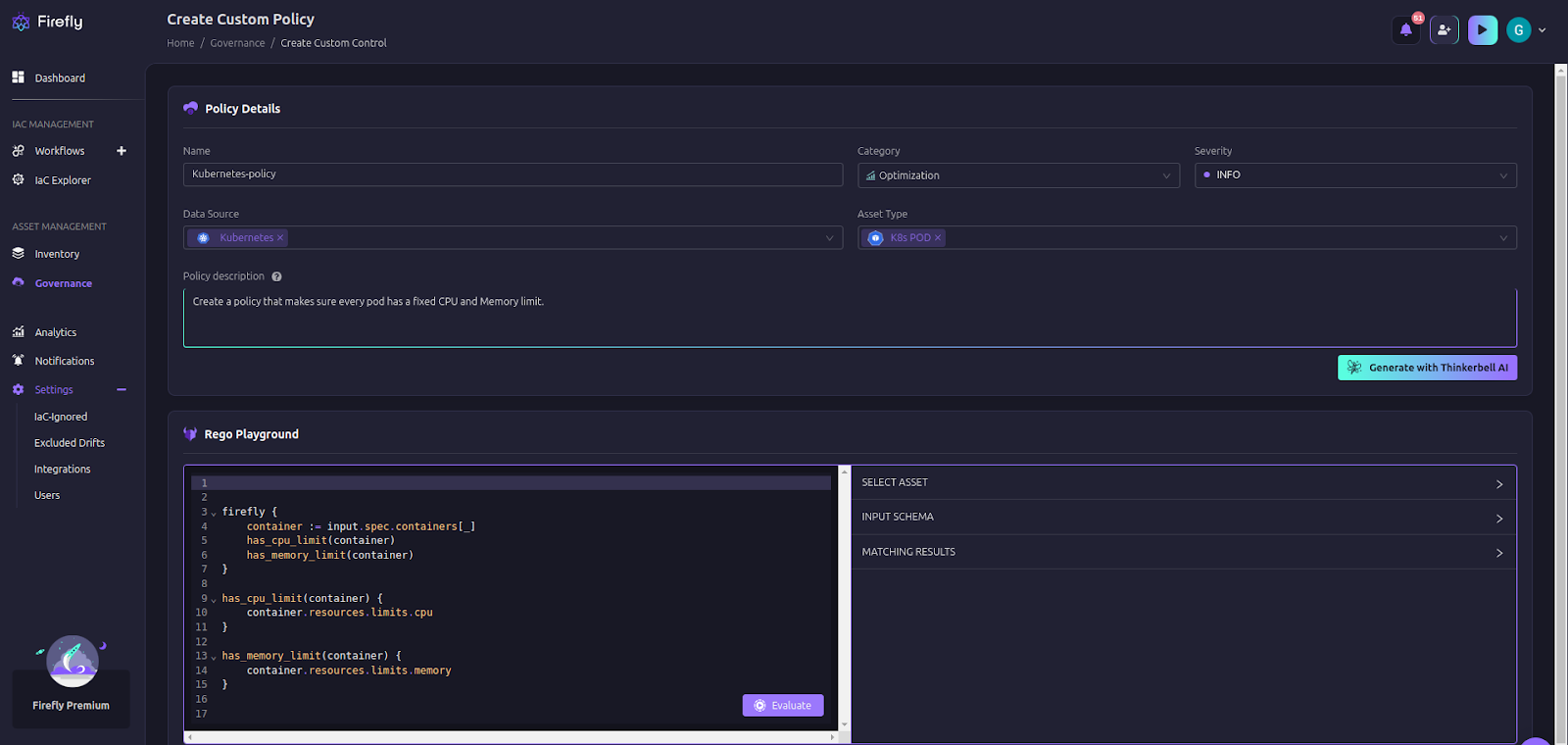

Governance and Security for EKS with Firefly

Governance ensures that everything running in your Kubernetes cluster follows company policies and external regulations like SOC 2 for data security or GDPR for customer privacy.

For example, Firefly can help by checking if pods are publicly exposed, ensuring that all Kubernetes resources have encryption enabled, or verifying IAM roles and permissions aren’t overly permissive.

Instead of manually checking clusters, Firefly lets you define security policies as code. This means you can write rules in Rego that enforce best practices automatically. It is also integrated with Thinkerbell AI, which can write the policy for you.

FAQs

Which is better, Terraform or Pulumi?

It depends on your needs. Terraform is great for declarative infrastructure using its own language, while Pulumi is better if you prefer using programming languages like Python or JavaScript for more flexibility and integration with existing code.

Does Velero back up PV data?

Yes, Velero can back up Persistent Volumes (PV) data along with the rest of your Kubernetes resources, ensuring your applications and storage are protected.

Are EKS and Kubernetes the same?

No, Kubernetes is a platform for managing containerized applications, while EKS is Amazon's managed service that runs and maintains Kubernetes for you on AWS.

Is Pulumi free or paid?

Pulumi offers both free and paid versions. The free version covers most use cases, while the paid version provides additional features like advanced security, policy enforcement, and team collaboration.